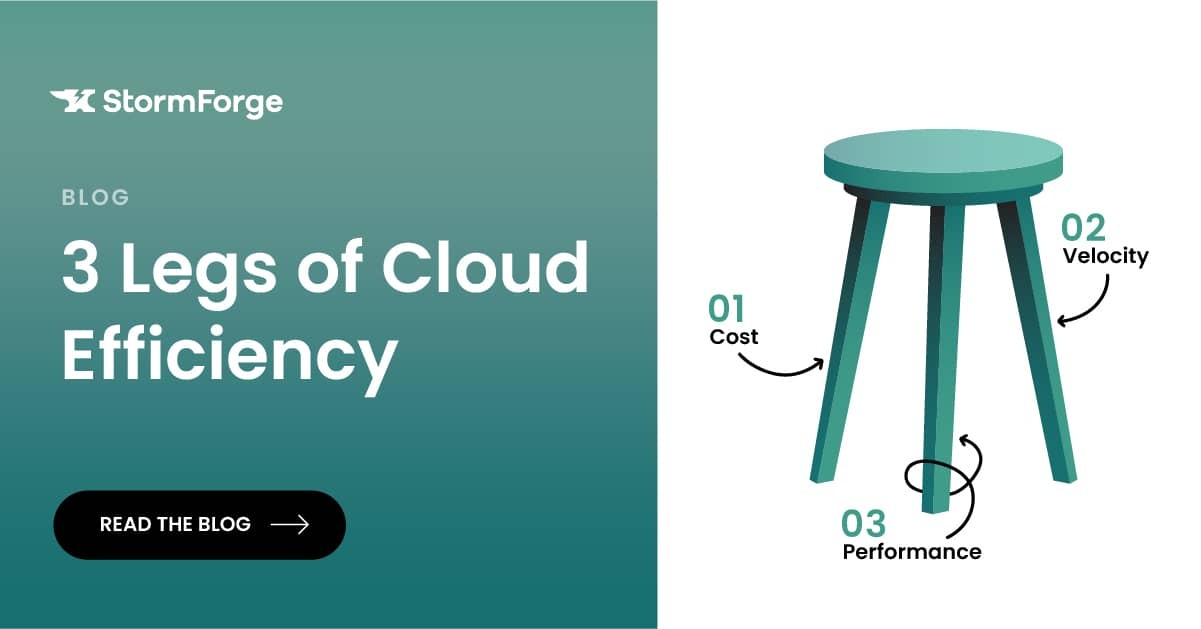

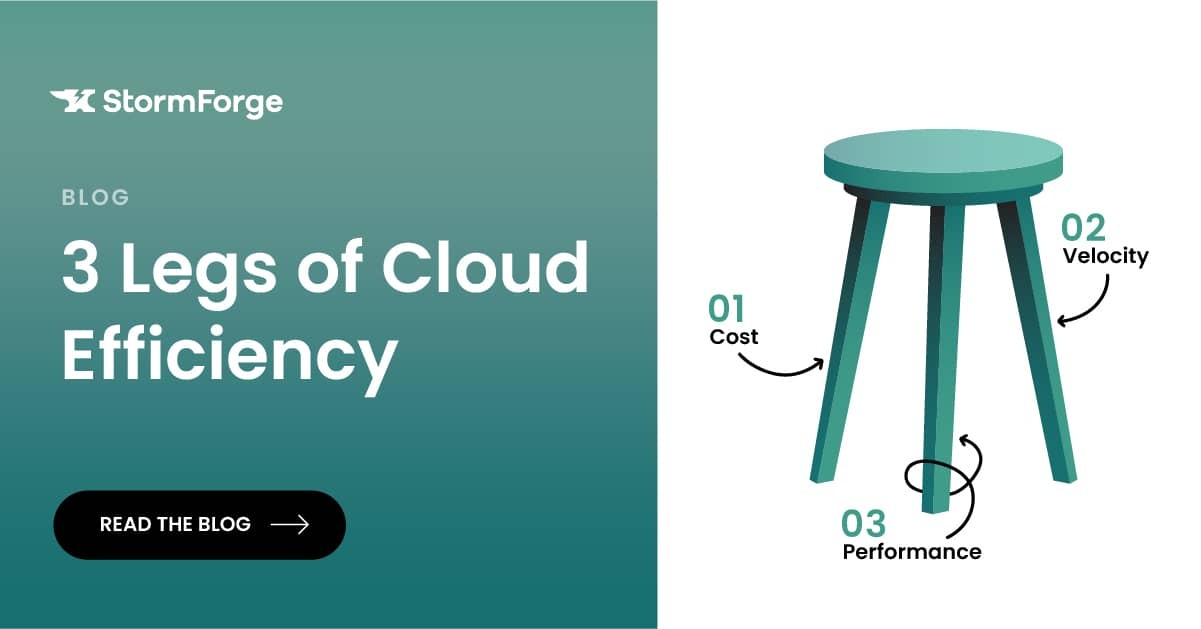

Discussions of cloud optimization typically center on cost. However, cost is just one leg of a three-legged stool. This article defines cloud optimization in terms of its three components (cost, performance and velocity) and offers suggestions for achieving it across all three. I’ll also address the “elephant in the room,” Kubernetes, and how its inherent complexity can be overcome. First things first: some history and context are needed to understand the root sources of inefficiency in the cloud.

In the “old” days before cloud, budgeting and purchasing for on-prem data centers meant buying hardware, software licenses and the like upfront and then installing them, with a long lead time of typically three months or more. Central finance controlled the process. With the cloud, that model has changed drastically. First, it’s on-demand, so you pay only for what you use, and it’s more dynamic, in that you can turn things on and off at will. Second, it breaks up the central finance purchasing model: individual developers can spin resources up or shut them down without finance even knowing. This dynamic environment is a big cultural shift for the organization, and it comes with increased complexity. For example, you’re not only picking among four different node types and four different node sizes, you’re also picking exactly what fraction of each node goes to CPU and what fraction goes to memory. You’re also sharing all of those resources between the different nodes, which makes a hard problem even harder.

The dynamic nature of the system is what makes all of this so complex, and the cloud providers’ elaborate billing models add to it. Which node type do you want? Do you want spot, on-demand or reserved instances? How much are you paying upfront? How are you blending reserved and on-demand instances? Scaling matters too, because you scale up for performance by adding resources before you need them, to avoid latency spikes. However, if you scale up for performance and never scale back down, you’re permanently provisioned for a big cloud, which is the antithesis of what you should do.
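The blending and scaling questions above come down to simple arithmetic. The following is a minimal sketch, not a pricing model for any real provider: the rates, the demand curve and the function name `blended_cost_cents` are all hypothetical, chosen only to show how a reserved baseline plus on-demand overflow compares with staying scaled up at peak.

```python
def blended_cost_cents(demand, reserved_nodes, reserved_rate, on_demand_rate):
    """Total cost in cents for a list of hourly node demands.

    Reserved nodes are billed every hour whether they are used or not.
    Demand above the reserved count is covered by on-demand nodes.
    """
    total = 0
    for nodes_needed in demand:
        overflow = max(0, nodes_needed - reserved_nodes)
        total += reserved_nodes * reserved_rate + overflow * on_demand_rate
    return total


# Hypothetical rates in cents per node-hour and a made-up demand curve.
RESERVED_RATE = 6
ON_DEMAND_RATE = 10
demand = [4, 4, 6, 10, 10, 6, 4, 4]  # nodes needed in each hour

# Scale up to peak and never scale down: 10 on-demand nodes every hour.
never_scaled_down = blended_cost_cents(
    [max(demand)] * len(demand), 0, RESERVED_RATE, ON_DEMAND_RATE
)

# Follow demand with on-demand nodes only.
on_demand_only = blended_cost_cents(demand, 0, RESERVED_RATE, ON_DEMAND_RATE)

# Reserve the 4-node baseline and cover the rest on demand.
blended = blended_cost_cents(demand, 4, RESERVED_RATE, ON_DEMAND_RATE)

print(never_scaled_down, on_demand_only, blended)
```

With these made-up numbers, staying at peak costs more than following demand, and reserving only the steady baseline costs less again. Real bills also depend on upfront payments, commitment terms and spot availability, which this sketch ignores.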

As a result of this complexity, people typically default to either over-provisioning or manually tuning by trial and error. Here’s the trap: having to choose between cost efficiency and customer-facing performance. A big cause of this trap is a lack of visibility. Beyond the cost and performance tradeoff, there’s also velocity. You may be able to achieve both performance and cost efficiency, but it’s going to take a lot of tough manual work, and that’s effort you’re not putting into shipping features.

The first step to achieving cloud optimization is to understand the problem: where you’re spending money, and whether that spend is providing the desired return on investment (ROI). That understanding goes beyond looking solely at dollars spent; in some cases, lowering the cost is not the top priority. If one engineering team is spending 10x more than another, is that really a problem, or is it justified? Are they supporting more traffic? Are they deliberately over-provisioned so the team can move faster and get a new product to market? Understanding your spend and confirming the return may require a huge effort, but it’s definitely your first step, before any optimization. For example, a team may initially over-provision for stability; that is expensive, but there’s not much production load yet and the priority is getting the product to market. Later, once the product is in the market, load has grown and costs are ballooning, the team can put in the engineering time to lower costs without sacrificing performance.
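One way to make the "is 10x really a problem?" question concrete is to normalize spend by the traffic it supports. The sketch below is illustrative only: the team names, spend figures and request counts are invented, and cost per million requests is just one possible unit metric.

```python
def cost_per_million_requests(monthly_spend, monthly_requests):
    """Return spend per one million requests served."""
    if monthly_requests <= 0:
        raise ValueError("monthly_requests must be positive")
    return monthly_spend / (monthly_requests / 1_000_000)


# Hypothetical teams: (monthly spend in dollars, monthly requests served).
teams = {
    "checkout": (50_000, 2_000_000_000),
    "reports": (5_000, 100_000_000),
}

unit_costs = {
    name: cost_per_million_requests(spend, requests)
    for name, (spend, requests) in teams.items()
}

for name, unit_cost in unit_costs.items():
    print(f"{name}: ${unit_cost:.2f} per million requests")
```

In this made-up example the team spending 10x more is serving 20x the traffic, so its unit cost is actually half that of the smaller team. Raw spend alone would have pointed at the wrong team.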

Second, identify clear places to win. For example, find orphaned instances: someone started one, forgot about it and left the company, and now it runs indefinitely. It’s a surprisingly common problem and probably one of the easiest ways to cut costs. If cutting costs is the aim, you have two basic choices: use fewer resources, or use the same resources but pay less for them, such as with AWS Savings Plans. Eventually, you have to go in and start right-sizing workloads, which requires visibility. Cost management vendors can identify your cloud spend and let you shut down idle resources.
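As a rough illustration of how orphaned instances might be surfaced, the sketch below flags long-running instances that are either nearly idle or no longer have an active owner. Everything here is an assumption made for the example: the `Instance` record, its fields, the thresholds and the sample data are hypothetical and do not correspond to any provider’s API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Instance:
    name: str
    owner: Optional[str]      # None if no owner tag was ever set
    avg_cpu_percent: float    # average CPU utilization over the review window
    days_running: int


def find_orphan_candidates(instances, active_owners,
                           max_cpu_percent=2.0, min_days=14):
    """Return names of instances worth reviewing as possible orphans.

    An instance is flagged when it has run for at least `min_days` and is
    either nearly idle or has no owner who is still active.
    """
    candidates = []
    for inst in instances:
        long_running = inst.days_running >= min_days
        idle = inst.avg_cpu_percent <= max_cpu_percent
        unowned = inst.owner is None or inst.owner not in active_owners
        if long_running and (idle or unowned):
            candidates.append(inst.name)
    return candidates


# Made-up inventory; "sam" has left the company in this example.
inventory = [
    Instance("api-prod", "dana", 45.0, 200),
    Instance("old-demo", "sam", 0.5, 90),
    Instance("batch-test", None, 30.0, 30),
    Instance("new-canary", "dana", 0.1, 2),
]

print(find_orphan_candidates(inventory, active_owners={"dana"}))
```

The output is a list of candidates for a person to review, not a list to delete automatically: a busy instance whose owner has left may still be serving real traffic.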

With respect to visibility, part of the reason cloud costs get out of control is that nobody notices. That doesn’t mean engineers don’t care; they just don’t know. Most engineers, if shown the numbers, will try to fix the problem themselves. It’s called the Prius effect: a study found that a large subset of Prius drivers responded to data about the vehicle’s battery power by driving in a manner that decreased fuel consumption. Likewise, if developers are conscious of their cloud usage and spend, they will try to improve it.

Bottom line: Cloud optimization goes beyond cost. It should be defined as getting the most out of the resources you request. It factors in performance and cost, and it factors in the developer time spent on the manual maintenance needed to manage cloud resources. True cloud efficiency is about having the right resources at the right time.

With the rapid adoption of cloud in the enterprise, cloud optimization considerations are paramount and need to go beyond rudimentary analysis of cost. Organizations must consider the other two legs of the cloud optimization stool, performance and velocity, if they hope to get the most out of their cloud infrastructure.
