Choosing Open or Closed Workload Models for Performance Testing

By Sebastian Cohnen | Jan 06, 2021

Load and performance testing tools differ in many ways. One aspect that is often overlooked is the general workload model. Tests that run with the wrong workload model can vastly underestimate latencies and provide a false sense of security. In this article we explain what workload models are and why we believe that StormForge uses the “right” approach for many, if not most, cases.

Workload models are a topic that we talk about a lot when giving presentations, doing product demos or onboarding our customers. We think a basic understanding of this topic is essential for building good and realistic performance tests.

At first sight, workload models are a boring, apparently “theoretical” topic. As with many theoretical topics, it turns out that they have a very substantial impact in practice. Workload models greatly influence what you are actually testing and are probably far more relevant than one might think. They also explain why you get vastly different results with different performance testing tools (because the tools implement different models). I’d also argue that this fundamental principle is often simply overlooked when choosing a tool to run any kind of performance test.

A workload is a unit of work that is executed, e.g., by a simulated agent, and usually consists of a series of requests and other steps. This could be one or more business transactions like “load start page”, “search for product”, “add to basket”, “begin checkout”, …

Workload models describe the basic principle by which a defined workload is executed during a performance test. In this article, we want to differentiate between open and closed workload models.

In a closed workload model, you define a fixed number of concurrent agents, isolated from one another, each performing a defined sequence of tasks (the workload) over and over again in a loop. There may be a pause between iterations, but that is not important for this article.

Here is some pseudocode to give you an idea of how a closed workload tool works in principle:

    for 1 to $concurrency do
      fork do
        while true do
          executeWorkload()
          sleep($iterationDelay)
        end
      end
    end
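To make the pseudocode concrete, here is a minimal Python sketch of a closed model load generator. It is an illustration under assumptions, not how any particular tool is implemented: the names `run_closed_model`, `execute_workload` and `iterations` are hypothetical, threads stand in for agents, and each agent runs a bounded number of iterations instead of looping forever so that the example terminates.

```python
import threading
import time


def run_closed_model(execute_workload, concurrency, iterations, iteration_delay=0.0):
    """Run a fixed number of agents that each repeat the workload in a loop."""

    def agent():
        # Assumption: a bounded loop replaces the endless loop of the pseudocode.
        for _ in range(iterations):
            # The next iteration only starts after this call has returned,
            # so a slow system under test slows the agent down as well.
            execute_workload()
            time.sleep(iteration_delay)

    agents = [threading.Thread(target=agent) for _ in range(concurrency)]
    for thread in agents:
        thread.start()
    for thread in agents:
        thread.join()
```

The number of agents never changes during the run: at most `concurrency` workloads are in flight at any point in time, no matter how slowly the target responds.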

A typical, well-known and very simple example of a closed model is Apache Bench (or ab for short). With ab you basically state how much concurrency you want and what you want to hit, and it will try to perform those requests as fast as possible. JMeter is another example of a closed model load generator.

In an open workload model, you have a defined rate of arrival at which new agents are spawned. Agents are isolated from one another, performing a defined sequence of tasks and are terminated when they have finished. Started agents are independent from one another and new agents are launched regardless of the state of currently active agents.

Again, here is an example in pseudocode of what an open model implementation looks like:

    while true do
      fork do
        executeWorkload()
      end
      sleep(1/$rateOfArrival)
    end
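The same idea as a minimal Python sketch, again under assumptions: `run_open_model`, `execute_workload` and `total_agents` are hypothetical names, threads stand in for agents, and the number of arrivals is bounded so that the example terminates. Arrivals follow a fixed schedule that does not depend on how long any workload takes.

```python
import threading
import time


def run_open_model(execute_workload, rate_of_arrival, total_agents):
    """Start a new agent every 1/rate_of_arrival seconds, regardless of active agents."""
    interval = 1.0 / rate_of_arrival
    start = time.monotonic()
    agents = []
    for k in range(total_agents):
        # Wait for the k-th scheduled arrival. The schedule is fixed up front
        # and is not influenced by agents that are still running.
        delay = start + k * interval - time.monotonic()
        if delay > 0:
            time.sleep(delay)
        agent = threading.Thread(target=execute_workload)
        agent.start()
        agents.append(agent)
    # Assumption: we wait for all agents at the end so the sketch exits cleanly.
    for agent in agents:
        agent.join()
```

Unlike in the closed sketch, the number of workloads in flight is not fixed here. If the target slows down, agents pile up because new ones keep arriving on schedule.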

StormForge uses an open workload model with the ability to mimic a closed model¹. Another example of an open workload model is tsung (which we’ve been using internally for many years).

One obvious difference between the models is that closed workload systems are easier to reason about: You know the number of agents in the system beforehand (it’s constant). For open models this number follows from Little’s Law²: it depends on the arrival rate and on how long each agent stays in the system.
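As a small worked example of Little’s Law (average number of agents in the system = arrival rate × average time an agent spends in the system), consider the following sketch. The function name and the numbers are made up for illustration.

```python
def expected_active_agents(arrival_rate_per_s, avg_time_in_system_s):
    """Little's Law: L = lambda * W (average agents in the system)."""
    return arrival_rate_per_s * avg_time_in_system_s


# 50 new agents per second, each workload takes 4 seconds on average.
print(expected_active_agents(50, 4))   # 200

# Same arrival rate, but the target has slowed down to 10 seconds per workload.
print(expected_active_agents(50, 10))  # 500
```

The arrival rate is what you configure in an open model; the number of concurrently active agents is a result of how fast the target responds.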

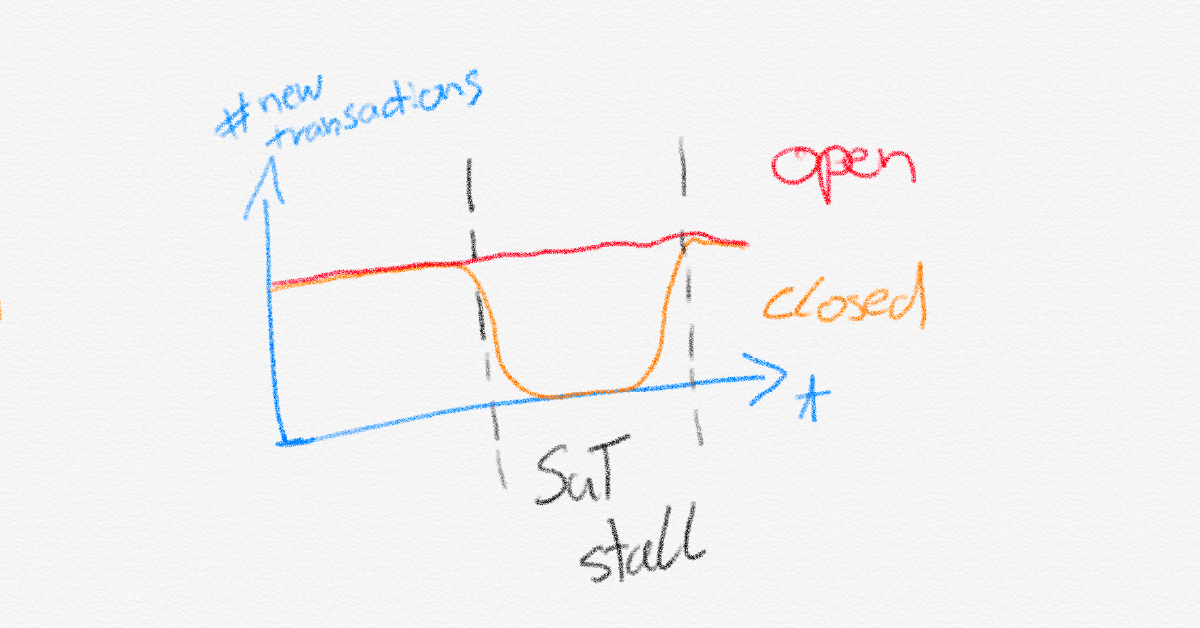

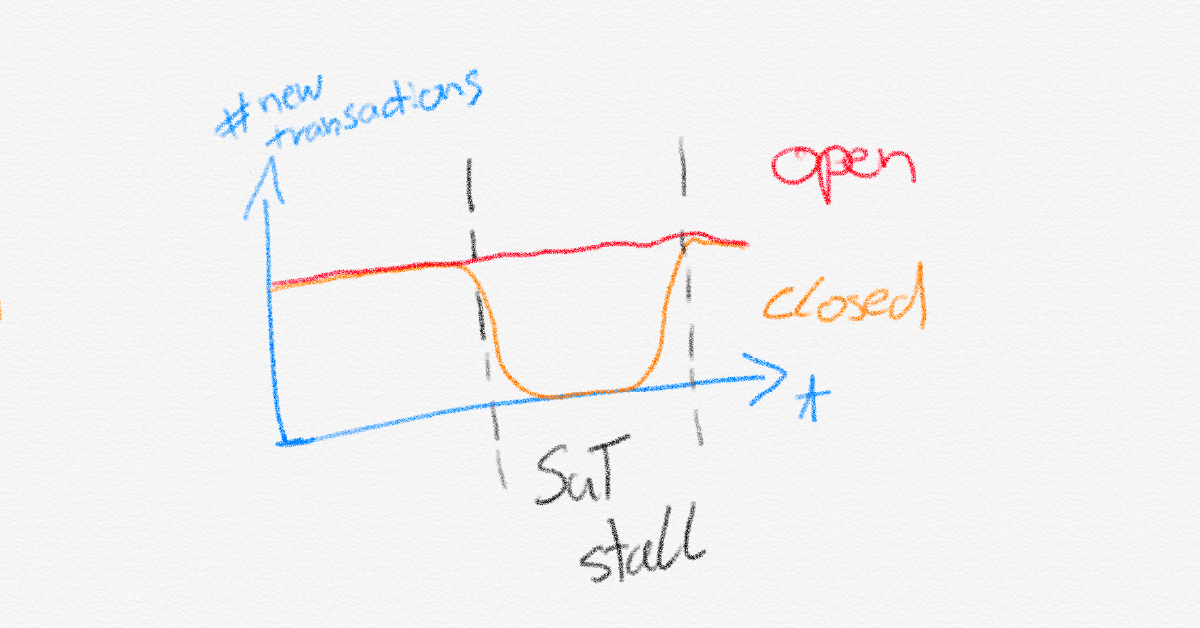

To quickly reiterate: Closed model systems have a fixed number of agents executing workloads. New work is only scheduled for an agent when it is done with the previous one. This leads to a fundamental drawback: The system under test (SuT) coordinates the test itself. If the SuT slows down or stalls, the entire test is impacted. During this time, all agents waiting for responses also stall, no new requests are made, and load is taken away from the SuT, which in turn allows the system to recover. Currently active agents in an open model system would also be impacted, but the crucial difference is that new agents continue to arrive at the system. This keeps the pressure on the target and better mimics real-world situations like marketing campaigns: A slow shop experience will not stop customers from clicking ads, hitting reload or opening newsletters.

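Imagine what happens to the rate of new transactions per unit of time when the system under test has a hiccup: in a closed model the rate drops for as long as the agents are stuck, while in an open model it stays the same.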
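The effect of such a stall can be reproduced with a toy simulation. This is a deliberately simplified sketch under assumptions: time advances in whole seconds, every request normally takes one second, and nothing completes during the stall window. All function names and numbers are hypothetical.

```python
def completion_time(start, stall_start, stall_end, service_time=1):
    """Return when a request finishes; nothing completes during the stall."""
    finish = start + service_time
    if stall_start <= finish < stall_end:
        return stall_end  # held back until the stall is over
    return finish


def closed_model_starts(agents, horizon, stall_start, stall_end):
    """New requests started per second by a fixed set of looping agents."""
    starts = [0] * horizon
    for _ in range(agents):
        t = 0
        while t < horizon:
            starts[t] += 1
            # An agent only starts new work once the previous request is done.
            t = completion_time(t, stall_start, stall_end)
    return starts


def open_model_starts(rate, horizon):
    """New requests started per second: arrivals do not depend on the target."""
    return [rate] * horizon


def open_model_in_flight(rate, horizon, stall_start, stall_end):
    """Requests in flight per second when arrivals continue during the stall."""
    in_flight = [0] * horizon
    for start in range(horizon):
        end = completion_time(start, stall_start, stall_end)
        for t in range(start, min(end, horizon)):
            in_flight[t] += rate
    return in_flight


closed = closed_model_starts(agents=5, horizon=30, stall_start=10, stall_end=20)
opened = open_model_starts(rate=5, horizon=30)
pending = open_model_in_flight(rate=5, horizon=30, stall_start=10, stall_end=20)
print(closed[8:22])
print(opened[8:22])
print(pending[8:22])
```

In this toy setup the closed model starts no new requests at all during the stall, so the target gets a break. The open model keeps starting five requests per second, and the number of requests in flight grows until the stall ends.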

There are also other issues regarding closed models, like the “Coordinated Omission Problem”, a term coined by Gil Tene.³

True open models only exist in theory, though. In theory the number of active clients is unbounded, but resources are limited, so in practice there is an upper limit. If you hit resource limits with your open model tests, the results should be considered inconclusive and discarded, and the tests repeated with more testing resources (or with a lower traffic model). This is the main reason why it is critically important to monitor the test itself closely, which is what StormForger does automatically for every executed test.

There are good reasons to use open and closed workload models. It is important to know the difference though.

We at StormForge believe that the open workload model is the one you probably want to use for many scenarios. It better reflects what happens in real-world situations, and in many cases it is better suited to detect problems with your system under test. Open models are harder to reason about, but the effort is worth it to ensure your load tests are realistic and your system can perform in production as expected.
