“History doesn’t repeat itself, but it often rhymes.”

– Mark Twain

Who quotes Mark Twain to begin a tech blog post?

Well, it turns out that technology history rhymes just as well.

In this case, I’m referring specifically to application infrastructure and a cycle of innovation and waste that has existed for my entire career.

For as long as I can remember, infrastructure velocity has been a leading indicator for competitive differentiation. For successful organizations, IT (and specifically IT’s ability to deploy and manage infrastructure quickly and efficiently) is a difference maker. While it’s true that “software is eating the world,” it turns out that software can’t run by itself.

For as long as I can remember, infrastructure velocity has been a leading indicator for competitive differentiation. For successful organizations, IT (and specifically IT’s ability to deploy and manage infrastructure quickly and efficiently) is a difference maker. While it’s true that “software is eating the world,” it turns out that software can’t run by itself.

This persistent chase for velocity has fueled some of the greatest technological innovations in delivering infrastructure. Predictably, each boom in velocity serves as a pressure test, quickly uncovering weak points in any IT organization and serving as inspiration for the next cycle of innovations.

We need only to look at recent history to find examples.

While virtualization has existed in some form for nearly 60 years, it was just the early 2000s when virtual machines debuted in the enterprise and it would be less than a decade before they revolutionized expectations for infrastructure delivery and management.

By the late 2000’s, rapid provisioning of virtual servers boosted the efficiency of infrastructure teams, but also led to a new set of phenomena. Specifically sprawl (the tendency for easy provisioning of resources to result in significant waste) and over-provisioning (the overcommitment of resources in a shared environment to ensure that critical applications do not suffer a loss in performance or uptime).

In response to these new challenges, a wave of monitoring, alerting and analytics technologies popped up at the end of the 2000s.

Unfortunately, for many a pre-cloud organization, the only real safety net in this paradigm was that there was a physical limit to the size of your environment.

At some point, your infrastructure became “full” — prompting teams to curb waste.

Then came…“the cloud”

The public cloud lifts the proverbial lid on the enterprise data center — exponentially expanding its access to resources, but also the likelihood of waste.

Where virtual server environments from a decade ago might have had thousands of virtual machines spread across a small handful of geographic locations, an organization shifting to the public cloud and Kubernetes can quickly see even more virtual machines hosting tens of thousands of containers spread across several providers and/or geographic locations.

One would do well just to tread water after jumping — or in some cases being pushed — into the deep end of the cloud pool. Pun intended.

Prioritizing velocity in a software-driven economy and without mature observability and resource optimization capabilities, many organizations shifting towards cloud assume that sprawl, over-provisioning and rising costs are simply the price to pay to compete.

Importantly, the shift to the cloud also serves as an inflection point for many organizations. Waste is amplified in the cloud, with many organizations now saying that reducing cloud waste is a top priority.

So as IT professionals, how do we simultaneously prioritize velocity, quality, performance, and cost control?

Earlier on, I described innovations that boost velocity as a quick way of uncovering weak points within an organization’s operational framework. I also described how technologies crop up to meet and resolve these challenges, and that this boom/break/fix cycle has perpetuated over time.

That said, there’s reason to believe that this cycle will be different.

New operational models and maturing technologies like machine learning promise to make sense of the complexity, help humans make better decisions, and make it easier to understand and manage both capital and operational costs.

From FinOps (an operational model where cross-functional stakeholders partner together using both processes and tooling to understand and manage costs) to AIOps (the concept of an AI using machine learning to parse log data and predict adverse events and even mitigate risks before adverse events occur), it’s clear that this next decade will see even more changes than we saw over the past two decades.

But many organizations aren’t prepared to adopt these new approaches and technologies quite yet. Often wrestling with both the intense pressure to shift legacy applications to the cloud, while also managing the growing complexity and costs of the applications that have already made the transition, there must be a way to find relief without waiting for the promise of either FinOps or AIOps.

Recent history has already given us a glimpse of this shift towards machine learning-driven solutions. Hardware infrastructure vendors in the storage market, for example, have been implementing ML to predict impending component failures and even forecast resource constraints for a decade. This proverbial “storage infrastructure crystal ball” has served to effectively deprecate the role of storage administrators in many organizations.

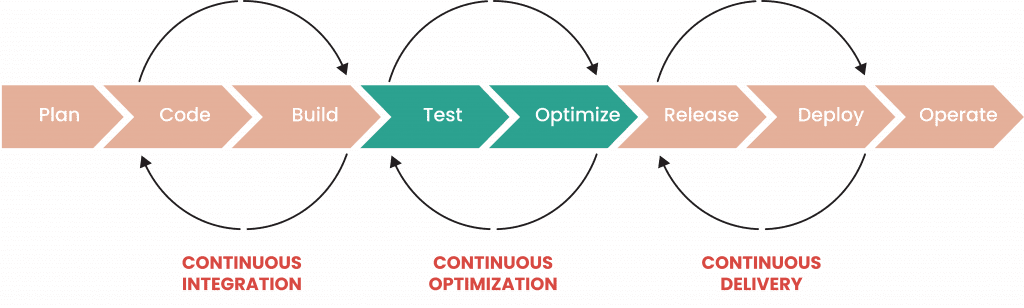

As organizations shift to using Kubernetes, it seems obvious that one way of pre-empting waste and controlling costs while ensuring availability and performance for applications (aka: The Unicorn) is to implement ML-driven testing and optimization within software development and delivery models like CI/CD.

This approach creates an “application optimization crystal ball”, both recommending fixes to inefficiencies in already-deployed applications, as well as serving as a quality gate ensuring any new deployments meet the business’ intent.

Kubernetes, rife with complexity and with no shortage of observability challenges, is a perfect place to apply ML.

In fact, combining the immense challenge with a market-wide skills shortage, deploying Kubernetes at scale without ML is a recipe for certain disaster.

So as we seek to break the rhythmic cadence of boom/break/fix, Kubernetes — a word with no rhyme — represents a fitting milestone to end this cycle.

StormForge solves this multi-dimensional predicament with a combo of scalable load testing-as-a-service and ML-driven rapid experimentation, to tune application parameters in Kubernetes to align with the business’ intent — effectively solving for problems before they occur and most importantly before customers are impacted.

Regardless of your organization’s maturity level with either AIOps or FinOps, StormForge can help provide answers quickly and without the need for historical data analysis or the arduous labeling associated with other machine learning technologies.

We use cookies to provide you with a better website experience and to analyze the site traffic. Please read our "privacy policy" for more information.