Chapter 4 - AKS Karpenter: A Deep Dive and Best Practices

Kubernetes offers resource management and autoscaling capabilities that can be enhanced by third-party tools to improve efficiency and reduce manual configuration when scaling resources to support rapidly changing workload requirements. Karpenter is a notable example of such a third-party tool. Originally developed by Amazon Web Services (AWS), Karpenter is open-source software that was donated to the Cloud Native Computing Foundation (CNCF). In December of 2023, Microsoft announced support for Karpenter in preview mode for use with Azure Kubernetes Service (AKS) as part of a functionality called node auto-provisioning (NAP).

In this article, we focus on the fundamentals of running Karpenter on AKS, provide examples of how to get started, and dive deeper into NAP for AKS.

Summary of AKS Karpenter concepts

Karpenter overview #

Karpenter is designed to work with any Kubernetes cluster regardless of its environment, including cloud providers and on-premises configurations. Karpenter offers features such as dynamic instance type selection, graceful handling of interrupted instances, and faster scheduling.

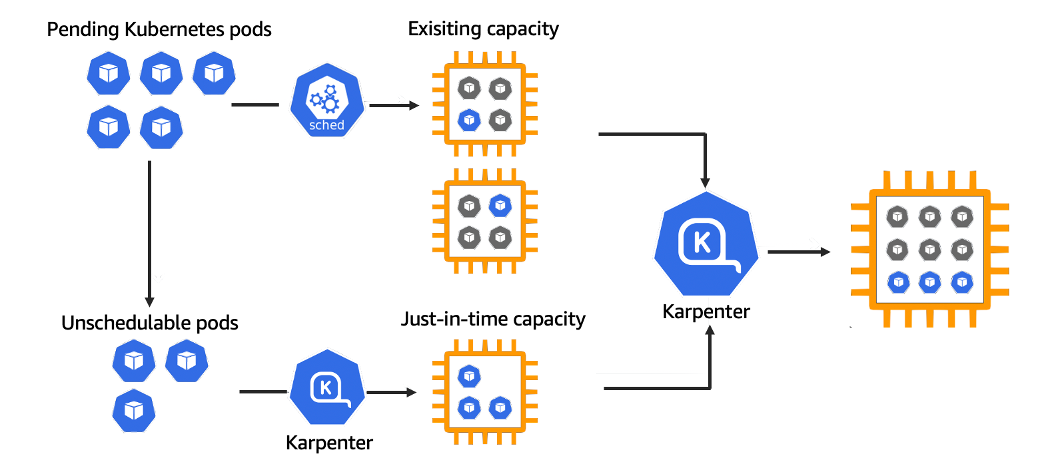

Karpenter observes the events within a Kubernetes cluster and sends commands to the cloud provider. It detects new pods, evaluates their scheduling constraints, and provisions nodes based on the requirements. New pods are then scheduled on the newly provisioned nodes. Karpenter removes nodes when no longer needed, minimizing scheduling latencies and infrastructure costs.
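To make this flow concrete, the sketch below writes a Deployment manifest whose pods carry the kind of scheduling constraints Karpenter evaluates: CPU and memory requests plus a node selector. The Deployment name (inflate), the file name, the replica count, and the pause image are illustrative assumptions rather than anything prescribed by Karpenter or AKS.

```shell
# Hypothetical file name for the generated manifest.
MANIFEST="inflate-deployment.yaml"

# Write a Deployment whose pods each request 1 CPU and 1Gi of memory.
# On a cluster without spare capacity these pods stay Pending, which is
# the signal Karpenter reacts to.
cat > "${MANIFEST}" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: inflate
spec:
  replicas: 5
  selector:
    matchLabels:
      app: inflate
  template:
    metadata:
      labels:
        app: inflate
    spec:
      nodeSelector:
        kubernetes.io/arch: amd64
      containers:
        - name: inflate
          image: registry.k8s.io/pause:3.9
          resources:
            requests:
              cpu: "1"
              memory: 1Gi
EOF

echo "Wrote ${MANIFEST}"
```

Applying the file with kubectl apply -f inflate-deployment.yaml on a cluster that lacks free capacity should leave the pods Pending until new nodes are provisioned for them; deleting the Deployment later gives Karpenter the opportunity to remove those nodes again.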

Karpenter uses custom resources called NodePools (named Provisioners in earlier Karpenter releases) that describe the nodes, or virtual machines, that Karpenter is allowed to provision. Whenever pods cannot be scheduled on existing capacity, Karpenter checks the NodePools to determine whether new nodes need to be created. A NodePool captures the constraints that apply to its nodes and their attributes. Its job includes:

- Defining taints that limit which pods can run on Karpenter’s nodes

- Defining the limitations of node creation (i.e., instance types, zones, and OS)

- Defining node expiration timers
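The three responsibilities above map onto fields of a NodePool manifest. The following is a minimal sketch, assuming the karpenter.sh/v1beta1 API; the NodePool name (batch), the taint key, the 720h expiry, and the eastus zone values are hypothetical and should be replaced with values that fit your cluster.

```shell
# Hypothetical file name for the generated manifest.
NODEPOOL_FILE="batch-nodepool.yaml"

cat > "${NODEPOOL_FILE}" <<'EOF'
apiVersion: karpenter.sh/v1beta1
kind: NodePool
metadata:
  name: batch                     # hypothetical name
spec:
  disruption:
    consolidationPolicy: WhenUnderutilized
    expireAfter: 720h             # node expiration timer (assumed value, 30 days)
  template:
    spec:
      nodeClassRef:
        name: default
      taints:                     # only pods that tolerate this taint are placed here
        - key: workload-type      # hypothetical taint key
          value: batch
          effect: NoSchedule
      requirements:               # limits on which nodes may be created
        - key: kubernetes.io/os
          operator: In
          values: ["linux"]
        - key: karpenter.azure.com/sku-family
          operator: In
          values: ["D"]
        - key: topology.kubernetes.io/zone
          operator: In
          values: ["eastus-1", "eastus-2"]   # assumes the eastus region
EOF

echo "Wrote ${NODEPOOL_FILE}"
```

Pods that should run on these nodes would need a matching toleration for the workload-type taint; everything else is kept off them.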

The node auto-provisioning (NAP) feature in AKS, which is based on Karpenter and has been in public preview since December 2023, aims to reduce the burden of designing node pool configurations before deploying workloads. Consolidation is a significant part of NAP: it reschedules workloads onto correctly sized virtual machines, which reduces the running costs of your applications.

Karpenter and node auto-provisioning (NAP) on Azure #

NAP is a new Azure feature based on the Karpenter project and the Azure Kubernetes Service (AKS). NAP determines the optimal VM configuration based on pending pod resource requirements. Note that this feature is still in preview as of writing this article and hasn’t reached general availability yet.

NAP is most useful when workloads become more complex, making resource requests and VM configurations more difficult. NAP automatically deploys, configures, and manages Karpenter on AKS clusters.

NAP vs. self-hosted modes #

Node auto-provisioning offers automatic scaling, updates, and maintenance, reducing operational overhead and integrating seamlessly with cloud-native services. In contrast, self-hosted node management solutions require manual management, providing more control over configurations and greater potential cost savings, but they demand greater expertise, time, and resources to maintain.

In NAP mode, Karpenter is run by AKS as an add-on, closely resembling a managed cluster autoscaler. Most users will benefit from this mode because it removes the overhead and complexity of manual configuration design.

On the other hand, in the self-hosted mode, Karpenter is run as a standalone deployment within a cluster. Self-hosted mode is suitable for advanced users who require more granular customization of Karpenter’s deployment and complete control over the automation of their clusters.

In the following sections we will walk through the processes of enabling NAP for both new and existing AKS clusters, illustrating the practical steps and considerations involved. These tutorials aim to clarify the setup procedures and highlight the benefits and challenges associated with each mode.

Running NAP with new and existing AKS clusters #

To utilize NAP as a managed add-on, you must have the following:

- An Azure subscription

- Azure CLI installed

- The aks-preview extension installed (version >= 0.5.170)

- The NodeAutoProvisioningPreview feature flag registered

The process is documented in the official learn.microsoft.com documentation, which provides instructions for both the Azure CLI and ARM templates in JSON format. A brief guide to the process using the Azure CLI follows.

First, install the aks-preview CLI extension, making sure you are on the latest version:

    az extension add --name aks-preview
    az extension update --name aks-preview

Next, use the az feature register command to register the NodeAutoProvisioningPreview feature and verify the registration status. Then refresh the Microsoft.ContainerService provider by running the following commands:

    az feature register --namespace "Microsoft.ContainerService" --name "NodeAutoProvisioningPreview"
    az feature show --namespace "Microsoft.ContainerService" --name "NodeAutoProvisioningPreview"
    az provider register --namespace Microsoft.ContainerService

Be aware that the registration process for the NodeAutoProvisioningPreview feature might take a few minutes; repeat the az feature show command until the reported state is Registered before refreshing the provider.
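Because registration can take a while, re-running the status check by hand gets tedious. One possible approach is a small polling helper; the sketch below is written for POSIX sh, and the function names wait_for_state and nap_feature_state are hypothetical helpers introduced here, not part of the Azure CLI. It assumes the state is exposed under properties.state in the az feature show output.

```shell
# wait_for_state EXPECTED MAX_ATTEMPTS SLEEP_SECONDS COMMAND...
# Runs COMMAND repeatedly until it prints EXPECTED.
# Returns 0 on success and 1 once MAX_ATTEMPTS is exhausted.
# (POSIX sh has no local variables, so these names are global.)
wait_for_state() {
  expected="$1"
  max_attempts="$2"
  delay="$3"
  shift 3
  attempt=1
  state=""
  while [ "${attempt}" -le "${max_attempts}" ]; do
    # A failing status command is treated as "not ready yet".
    state="$("$@" 2>/dev/null || true)"
    if [ "${state}" = "${expected}" ]; then
      echo "state is ${expected} after ${attempt} attempt(s)"
      return 0
    fi
    sleep "${delay}"
    attempt=$((attempt + 1))
  done
  echo "gave up after ${max_attempts} attempt(s); last state: ${state}" >&2
  return 1
}

# Hypothetical wrapper around the az feature show call used in this article.
# Assumes the registration state lives under properties.state.
nap_feature_state() {
  az feature show \
    --namespace "Microsoft.ContainerService" \
    --name "NodeAutoProvisioningPreview" \
    --query "properties.state" \
    --output tsv
}
```

With both functions defined, wait_for_state Registered 30 10 nap_feature_state would poll every 10 seconds for up to 5 minutes, and az provider register can be chained after it with &&.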

After successfully installing the extension, registering the feature, and refreshing the container service provider, you can enable auto-provisioning on a new or existing AKS cluster.

New cluster node auto-provisioning

Enabling NAP on a new cluster is as simple as defining the correct network plugin mode and dataplane arguments and setting the node provisioning mode to Auto. To do so, run the Azure CLI command that creates a new cluster with the following arguments:

    az aks create \
        --name a_name_of_your_choice \
        --resource-group the_resource_group_where_the_cluster_belongs_to \
        --node-provisioning-mode Auto \
        --network-plugin azure \
        --network-plugin-mode overlay \
        --network-dataplane cilium \
        --generate-ssh-keys

This will create a new cluster with node-provisioning-mode set to Auto.

Existing cluster node auto-provisioning

Enabling NAP on existing clusters has been a long-anticipated improvement since node auto-provisioning became available in AKS.

Start by confirming that the aks-preview extension is installed by running the following command:

    az extension list | grep aks-preview

With the extension installed, follow the registration procedure described earlier in this article.

Now, to enable NAP on your existing cluster, run the following:

    az aks update \
        --name the_name_of_your_cluster \
        --resource-group the_resource_group_where_the_cluster_belongs_to \
        --node-provisioning-mode Auto \
        --network-plugin azure \
        --network-plugin-mode overlay \
        --network-dataplane cilium

Wait for the update command to finish, and look into Kubernetes API resources to verify that the custom resource definitions are present by running the kubectl api-resources command for references to karpenter.sh.
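The verification step can be scripted rather than checked by eye. The sketch below defines a hypothetical helper, check_karpenter_crds, that reads the output of kubectl api-resources -o name on standard input and reports whether the expected resources are present. The two resource names it looks for (nodepools and nodeclaims in the karpenter.sh group) are an assumption based on the v1beta1 API used elsewhere in this article.

```shell
# check_karpenter_crds
# Reads a list of resource names (one per line) on stdin and verifies that
# each expected Karpenter resource appears. Returns 1 if any is missing.
check_karpenter_crds() {
  listing="$(cat)"
  missing=0
  # Assumed resource names for the karpenter.sh/v1beta1 API.
  for crd in nodepools.karpenter.sh nodeclaims.karpenter.sh; do
    # -x matches whole lines, -F treats the name as a fixed string.
    if printf '%s\n' "${listing}" | grep -qxF "${crd}"; then
      echo "found ${crd}"
    else
      echo "missing ${crd}" >&2
      missing=1
    fi
  done
  return "${missing}"
}
```

A typical invocation would be kubectl api-resources --api-group=karpenter.sh -o name | check_karpenter_crds, which exits non-zero if the update has not installed the definitions yet.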

With node-provisioning-mode set to Auto, you must disable the user-mode node pool, which in practice means scaling it to 0. If the cluster autoscaler was previously enabled for your node pools, remember to disable it first, before scaling to 0.

To disable the cluster-autoscaler:

    az aks nodepool update \
        --name name_of_node_pool \
        --cluster-name name_of_cluster \
        --resource-group the_resource_group_where_the_cluster_belongs_to \
        --disable-cluster-autoscaler

To scale your user node pool to 0:

    az aks nodepool scale \
        --name name_of_node_pool \
        --cluster-name name_of_cluster \
        --resource-group the_resource_group_where_the_cluster_belongs_to \
        --no-wait \
        --node-count 0

Within moments, you should see Karpenter in action in the events and when listing your pods using kubectl.

Installing Karpenter using Helm and creating your first NodePool #

With an AKS cluster in place, you can use the shell script provided by the Karpenter Provider for Azure repository to make the initial YAML template. You can use curl to retrieve and execute the script as follows:

    curl -sO https://raw.githubusercontent.com/Azure/karpenter-provider-azure/main/hack/deploy/configure-values.sh
    chmod +x ./configure-values.sh && ./configure-values.sh name_of_cluster the_resource_group karpenter-sa karpentermsi

Replace name_of_cluster and the_resource_group with the appropriate values for your environment.

The commands above will generate the initial YAML file for your Helm configuration.

Now, set the environment variables to the correct values for your environment:

    export CLUSTER_NAME=name_of_your_cluster
    export RG=name_of_your_resource_group
    export LOCATION=location_of_your_resources
    export KARPENTER_NAMESPACE=your_kubernetes_namespace
    export KARPENTER_VERSION=0.5.0

Next, define the NodePool resource in a YAML file:

    apiVersion: karpenter.sh/v1beta1
    kind: NodePool
    metadata:
      name: default
    spec:
      disruption:
        consolidationPolicy: WhenUnderutilized
        expireAfter: Never
      template:
        spec:
          nodeClassRef:
            name: default
          # Requirements that constrain the parameters of provisioned nodes.
          # These requirements are combined with pod.spec.affinity.nodeAffinity rules.
          # Operators { In, NotIn, Exists, DoesNotExist, Gt, and Lt } are supported.
          # https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/#operators
          requirements:
            - key: kubernetes.io/arch
              operator: In
              values:
                - amd64
            - key: kubernetes.io/os
              operator: In
              values:
                - linux
            - key: karpenter.sh/capacity-type
              operator: In
              values:
                - on-demand
            - key: karpenter.azure.com/sku-family
              operator: In
              values:
                - D

Then run the following commands to install Karpenter with Helm using the generated karpenter-values.yaml file and to follow the controller logs:

    helm upgrade --install karpenter oci://mcr.microsoft.com/aks/karpenter/karpenter \
        --version "${KARPENTER_VERSION}" \
        --namespace "${KARPENTER_NAMESPACE}" --create-namespace \
        --values karpenter-values.yaml \
        --set controller.resources.requests.cpu=1 \
        --set controller.resources.requests.memory=1Gi \
        --set controller.resources.limits.cpu=1 \
        --set controller.resources.limits.memory=1Gi \
        --wait

    kubectl logs -f -n "${KARPENTER_NAMESPACE}" -l app.kubernetes.io/name=karpenter -c controller

The examples above are simply guides to get you started with a working proof of concept. For detailed documentation and guidelines, navigate to the official repository for the project.

Karpenter Limitations on AKS #

Karpenter on Azure Kubernetes Service has several limitations:

- Using Karpenter on AKS is subject to Azure Resource Manager limits, restricting resources like virtual machines, disks, and IP addresses.

- Karpenter doesn’t support mixed-instance types within a single node group, limiting its cost-optimization flexibility.

- The availability of specific VM types varies by Azure region, affecting its effectiveness.

- Integrating Karpenter with AKS’s managed services and security features, such as managed identities, can be complex and requires careful configuration and extensive experience with Azure.

- Only AKS clusters with CNI overlay and the Cilium data plane are supported. The latter affects organizations that use other networking solutions, like Flannel, Calico, or kube-router. Operating system support is also limited, with Linux being the only OS supported on the nodes.
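The first limitation in the list, subscription quotas, can be checked before Karpenter starts requesting capacity. The sketch below defines a hypothetical filter, quotas_near_limit, that reads tab-separated rows of quota name, current usage, and limit, and prints the quotas at or above a chosen percentage. How those rows are produced is an assumption: one option is az vm list-usage with a --query expression, assuming its JSON output exposes name.localizedValue, currentValue, and limit.

```shell
# quotas_near_limit [THRESHOLD]
# Reads "name<TAB>used<TAB>limit" rows on stdin and prints every quota whose
# usage is at or above THRESHOLD percent (default 80). Rows with a limit of
# 0 are skipped to avoid dividing by zero.
quotas_near_limit() {
  threshold="${1:-80}"
  awk -F '\t' -v t="${threshold}" '
    $3 > 0 && ($2 * 100 / $3) >= t {
      printf "%s: %d of %d used\n", $1, $2, $3
    }'
}

# Example invocation (assumed field names, hypothetical region):
#   az vm list-usage --location eastus \
#     --query "[].[name.localizedValue, currentValue, limit]" \
#     --output tsv | quotas_near_limit 80
```

Running the filter ahead of a large scale-out gives an early warning that node provisioning may fail for quota reasons rather than because of anything in the NodePool definition.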

Finally, Karpenter’s integration with AKS using node auto-provisioning (NAP) operates only at the cluster level, leaving the Kubernetes administrators responsible for pod autoscaling. However, horizontal and vertical pod autoscaling are interdependent and can be challenging to accurately and continuously configure manually in large environments.

In the next section, we introduce StormForge’s Optimize Live as a natural complement to Karpenter, designed to automate Kubernetes resource management using machine learning algorithms to continuously rightsize workloads in harmony with horizontal pod autoscaling (HPA).

Complementing AKS Karpenter with automated rightsizing #

With Karpenter taking care of your worker nodes, it’s time to consider your application scaling needs. StormForge Optimize Live continuously rightsizes workloads using machine learning and automation. Optimize Live adjusts CPU and memory requests based on actual usage data, significantly reducing resource waste and cloud costs while improving application performance.

Optimize Live vertically adjusts resource requests and limits based on usage patterns, integrating seamlessly with Kubernetes’ Horizontal Pod Autoscaler, which scales pods horizontally to handle sudden spikes in demand. Simply put, it ensures that your applications are provisioned with the right resources, leading to significant cost savings.

While Karpenter and Optimize Live can be used independently, the real value comes from combining them. That way, you get rightsized pods with the most efficient placement, resulting in significant cloud cost savings.

You can learn more about Optimize Live on the product page, and try it out by signing up for a free trial or interacting with the user interface in the sandbox environment.

Conclusion #

Effective management of Kubernetes clusters is necessary for optimizing resource utilization, improving efficiency, and reducing costs. However, manual cluster and pod autoscaling configurations can be tedious and inaccurate. By leveraging tools such as Karpenter and StormForge, organizations can implement automation with proper constraints to utilize node pools in Azure and continuously monitor and adjust resources to ensure application stability and efficiency. With the right strategies in place, businesses can balance cost efficiency and application stability while meeting the demands of varying workloads.