Chapter 6 - Karpenter vs Cluster Autoscaler

The Kubernetes Cluster Autoscaler (CAS) and Karpenter are two widely used open-source tools for autoscaling Kubernetes nodes. CAS operates primarily through autoscaling groups (node groups) and assumes that all nodes within a node group are identical. Supporting several instance types therefore typically means creating multiple node groups, which often leads to a proliferation of node groups, especially in larger clusters. This node-group-centric strategy works, but it adds complexity and can make scaling less efficient.

Karpenter moves away from the one-size-fits-all node groups that characterize traditional autoscalers like CAS. Instead, it selects instance types to fit the requirements of pending pods, which aligns provisioned capacity more closely with what workloads need. Its node consolidation capabilities improve resource usage and reduce costs, and Karpenter is also known for faster node startup times and stronger support for Spot Instances.

In this article, we compare these two options by reviewing the relevant concepts in detail.

Summary of key concepts related to the Karpenter vs. Cluster Autoscaler comparison

What is the Cluster Autoscaler?

The Cluster Autoscaler (CAS) automatically adjusts the size of a Kubernetes cluster based on resource needs. It continuously watches the API server for pods that cannot be scheduled and adds nodes to a suitable node group to host them. It also identifies underutilized nodes and, once their pods can be rescheduled onto other nodes, drains and removes them.
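The scale-up half of this loop can be sketched in a few lines of Python. This is a simplified model, not CAS's code: each hypothetical node group is described by a single template node, mirroring CAS's assumption that all nodes in a group are identical; pending pods are packed onto copies of that template with first-fit decreasing; and the group is chosen the way CAS's least-waste expander is documented to work, by the least idle CPU left after scale-up, with idle memory as the tie-breaker. Group names and sizes are invented.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass(frozen=True)
class NodeGroup:
    name: str
    cpu_m: int   # allocatable CPU per node (millicores); every node in a group is assumed identical
    mem_mi: int  # allocatable memory per node (MiB)

@dataclass(frozen=True)
class Pod:
    cpu_m: int   # CPU request (millicores)
    mem_mi: int  # memory request (MiB)

def nodes_needed(group: NodeGroup, pods: List[Pod]) -> Optional[int]:
    """First-fit-decreasing packing of pending pods onto copies of the group's template node.

    Returns how many new nodes the group would need, or None if some pod can never fit."""
    free: List[List[int]] = []  # remaining [cpu, mem] on each simulated new node
    for pod in sorted(pods, key=lambda p: (p.cpu_m, p.mem_mi), reverse=True):
        if pod.cpu_m > group.cpu_m or pod.mem_mi > group.mem_mi:
            return None
        for slot in free:
            if pod.cpu_m <= slot[0] and pod.mem_mi <= slot[1]:
                slot[0] -= pod.cpu_m
                slot[1] -= pod.mem_mi
                break
        else:
            free.append([group.cpu_m - pod.cpu_m, group.mem_mi - pod.mem_mi])
    return len(free)

def choose_group(groups: List[NodeGroup], pods: List[Pod]) -> Optional[Tuple[str, int]]:
    """Least-waste style choice: least idle CPU after scale-up, ties broken by idle memory."""
    best = None
    for group in groups:
        count = nodes_needed(group, pods)
        if count is None:
            continue
        idle = (count * group.cpu_m - sum(p.cpu_m for p in pods),
                count * group.mem_mi - sum(p.mem_mi for p in pods))
        if best is None or idle < best[0]:
            best = (idle, group.name, count)
    return None if best is None else (best[1], best[2])

groups = [NodeGroup("general-4cpu", 4000, 16384), NodeGroup("compute-8cpu", 8000, 16384)]
print(choose_group(groups, [Pod(1000, 2048)] * 3))  # ('general-4cpu', 1)
print(choose_group(groups, [Pod(6000, 4096)]))      # ('compute-8cpu', 1)
```

Note that the only way to get a differently shaped node in this model is to add another NodeGroup, which is exactly the node group proliferation described in the introduction.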

CAS supports multiple node configurations, but managing that variety adds complexity. Teams often settle on a single node type because each additional instance type with a different CPU and memory shape generally requires its own autoscaling group.

What are the limitations of the Cluster Autoscaler?

While CAS performs its core functions effectively, it has drawbacks and limitations. It can scale up quickly, but it scales down cautiously: a node must stay unneeded for a configurable period (10 minutes by default) before CAS removes it, and nodes that still run pods are generally drained one at a time. After a surge, this can mean a slow return to normal capacity and a longer period of underutilized resources. Let’s review some of the other limitations.

Autoscaling-group-centered strategy

Because each node group is one-size-fits-all, many pods fit poorly on the available nodes, which lowers resource utilization and often results in overprovisioning. Node group configuration is also inflexible, since each group typically consists of a single instance type. Creating multiple node groups for different workloads is possible, but it significantly increases complexity and management overhead.

One example is mixing on-demand and Spot Instances. CAS cannot choose between on-demand and Spot capacity for a given pod, so the usual pattern is to maintain separate autoscaling groups for each and add extra logic, such as the priority expander plus node labels and taints, to steer workloads between them. Such configurations become cumbersome and inefficient to maintain over time.
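The growth is multiplicative. As an illustration, assume a cluster needs three instance types with different CPU and memory shapes (so they cannot share one mixed-instances group), both capacity types, and one autoscaling group per Availability Zone, which is the common CAS guidance on AWS for zone-bound workloads such as those using EBS volumes. The sketch below enumerates the resulting groups; the instance types, zones, and naming scheme are examples only.

```python
from itertools import product

# Hypothetical dimensions a team might need to cover with CAS node groups.
instance_types = ["m5.xlarge", "c5.2xlarge", "r5.xlarge"]  # 4/16, 8/16, 4/32 vCPU/GiB
capacity_types = ["on-demand", "spot"]
zones = ["us-east-1a", "us-east-1b", "us-east-1c"]

def node_group_names(instance_types, capacity_types, zones):
    """One autoscaling group per (instance type, capacity type, zone) combination."""
    return [f"{it}-{ct}-{zone}" for it, ct, zone in product(instance_types, capacity_types, zones)]

groups = node_group_names(instance_types, capacity_types, zones)
print(len(groups))  # 18 (3 instance types x 2 capacity types x 3 zones)
print(groups[0])    # m5.xlarge-on-demand-us-east-1a
```

Each new instance type adds six more groups in this setup, while a single Karpenter NodePool can express the same space as requirements on instance type, capacity type, and zone.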

Limited downscaling capabilities

Downscaling in the Cluster Autoscaler brings its own complexities, often requiring additional configuration and sometimes external tools. The process is not only slow, because of the cautious one-node-at-a-time approach, but also needs careful tuning to avoid wasting resources while keeping up with fluctuating application demand.

The CAS downscaling strategy is limited because it evaluates nodes one at a time, which restricts its ability to consolidate resources. CAS only considers a node for removal when its utilization (the sum of pod requests divided by the node’s allocatable capacity) falls below a threshold, 50% by default. In a cluster of 20 nodes each at 70% utilization, no node drops below that threshold, so CAS may remove nothing, even though the aggregate load would fit on fewer nodes.

In contrast, Karpenter evaluates the cluster more holistically. The 20 nodes carry only 14 nodes’ worth of load (20 × 70%), so Karpenter could potentially consolidate them onto about 15 better-utilized nodes, improving efficiency and reducing operational costs.
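The arithmetic behind this example can be checked with a short Python sketch. It assumes 1,000m nodes each running seven 100m pods (70% utilization by requests), looks at CPU only, and uses CAS’s default --scale-down-utilization-threshold of 0.5. The repack uses plain first-fit decreasing as a stand-in for a whole-cluster view; it is not Karpenter’s consolidation algorithm.

```python
NODE_CAPACITY_M = 1000  # hypothetical allocatable CPU per node (millicores)
CAS_THRESHOLD = 0.5     # CAS default --scale-down-utilization-threshold

# 20 nodes, each running seven 100m pods: 70% utilization by requests.
cluster = [[100] * 7 for _ in range(20)]

def cas_scale_down_candidates(nodes):
    """Per-node view: only nodes whose requested utilization is below the threshold qualify."""
    return [i for i, pods in enumerate(nodes) if sum(pods) / NODE_CAPACITY_M < CAS_THRESHOLD]

def repack_ffd(nodes):
    """Whole-cluster view: repack every pod with first-fit decreasing, return the node count."""
    free = []  # spare capacity on each node of the repacked layout
    for pod in sorted((p for pods in nodes for p in pods), reverse=True):
        for i, spare in enumerate(free):
            if pod <= spare:
                free[i] -= pod
                break
        else:
            free.append(NODE_CAPACITY_M - pod)
    return len(free)

total = sum(map(sum, cluster))
print(cas_scale_down_candidates(cluster))       # []: 0.7 >= 0.5 on every node
print(repack_ffd(cluster))                      # 14: the floor, with every node full
print(f"{total / (15 * NODE_CAPACITY_M):.0%}")  # 93%: utilization if spread over 15 nodes
```

Real consolidation also has to respect scheduling constraints and the instance types actually available, so the result usually lands above the 14-node floor, for example at the 15 nodes mentioned in the text.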

What is Karpenter?

Karpenter is an open-source node autoscaler for Kubernetes designed to make cluster scaling more efficient and responsive. Instead of resizing predefined node groups, it calls cloud provider APIs directly (on AWS, it launches EC2 instances without autoscaling groups), which enables responsive, flexible instance provisioning and native use of cloud features such as Spot Instances. It also includes features for improving resource efficiency and reducing costs.

Karpenter uses a just-in-time approach: it provisions nodes of the right type as soon as pending pods need them. This lets it adapt to changing workload demands faster than traditional autoscalers.

Karpenter introduces NodePools and NodeClasses, which provide fine-grained control over infrastructure provisioning. A NodePool constrains which nodes Karpenter may create for a set of workloads, while a NodeClass (EC2NodeClass on AWS) holds cloud-specific settings such as machine images, subnets, security groups, and custom user-data scripts. Together they let administrators tailor resources precisely to the needs of different workloads, which simplifies managing diverse and dynamic environments.
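On AWS, the NodeClass is an EC2NodeClass. The sketch below builds one as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts as readily as YAML. It assumes the `karpenter.k8s.aws/v1` API (field names differ in older beta releases). The cluster name, IAM role name, discovery tag, and user-data script are placeholders, and the user-data format Karpenter expects depends on the AMI family, so check Karpenter’s documentation before relying on it.

```python
import json

CLUSTER = "my-cluster"  # placeholder cluster name

# Cloud-specific node settings for AWS (karpenter.k8s.aws/v1 schema assumed).
ec2_node_class = {
    "apiVersion": "karpenter.k8s.aws/v1",
    "kind": "EC2NodeClass",
    "metadata": {"name": "default"},
    "spec": {
        "role": f"KarpenterNodeRole-{CLUSTER}",             # IAM role for launched nodes (placeholder)
        "amiSelectorTerms": [{"alias": "al2023@latest"}],  # Amazon Linux 2023; pin a version in production
        # Discover subnets and security groups by tag.
        "subnetSelectorTerms": [{"tags": {"karpenter.sh/discovery": CLUSTER}}],
        "securityGroupSelectorTerms": [{"tags": {"karpenter.sh/discovery": CLUSTER}}],
        # Custom bootstrap script run on each node (placeholder content).
        "userData": "#!/bin/bash\necho 'custom node setup'\n",
    },
}

print(json.dumps(ec2_node_class, indent=2))
```

Saved as a script, its output could be applied with `python nodeclass.py | kubectl apply -f -` (the file name is arbitrary).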

Karpenter also supports several consolidation mechanisms: empty-node, multi-node, and single-node consolidation. They reduce idle capacity by removing nodes that are empty or whose pods can run elsewhere, or by replacing nodes with cheaper ones. Karpenter can also apply consolidation to Spot capacity (spot-to-spot consolidation), replacing Spot nodes with cheaper Spot nodes to improve cost-effectiveness.

Take a deeper dive into Karpenter, including a tutorial and best practices, in our dedicated Karpenter article.

How does Karpenter improve on the Cluster Autoscaler?

Karpenter improves on the Cluster Autoscaler by increasing flexibility (it does not take a one-size-fits-all approach), consolidating more efficiently, and supporting Spot Instances better. Let’s review these advantages in more detail.

Greater flexibility for diverse workloads

Workloads often have very different resource consumption profiles. CAS node groups may not offer the granularity needed to serve them all efficiently. Karpenter’s highly customizable NodePools (called Provisioners in older releases) let you address this complexity directly by:

- Defining instance types to match specific workload needs: CPU-heavy, memory-bound, or GPU-accelerated

- Targeting availability zones based on workload placement preferences

- Leveraging spot instances opportunistically for cost-sensitive workloads
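A single NodePool can cover all three of these at once. The sketch below builds one in Python and prints it as JSON for kubectl. It assumes the `karpenter.sh/v1` API and an EC2NodeClass named `default` (a placeholder); the instance categories, zones, and CPU limit are example values.

```python
import json

node_pool = {
    "apiVersion": "karpenter.sh/v1",
    "kind": "NodePool",
    "metadata": {"name": "general"},
    "spec": {
        "template": {
            "spec": {
                "requirements": [
                    # Instance shape: compute (c), general-purpose (m) and memory-optimized (r) families.
                    {"key": "karpenter.k8s.aws/instance-category", "operator": "In", "values": ["c", "m", "r"]},
                    # Placement: only these Availability Zones (example values).
                    {"key": "topology.kubernetes.io/zone", "operator": "In", "values": ["us-east-1a", "us-east-1b"]},
                    # Capacity: allow Spot, with On-Demand also permitted.
                    {"key": "karpenter.sh/capacity-type", "operator": "In", "values": ["spot", "on-demand"]},
                ],
                # Cloud-specific settings come from a separate EC2NodeClass (placeholder name).
                "nodeClassRef": {"group": "karpenter.k8s.aws", "kind": "EC2NodeClass", "name": "default"},
            }
        },
        # Upper bound on the total CPU this NodePool may provision.
        "limits": {"cpu": "1000"},
    },
}

print(json.dumps(node_pool, indent=2))
```

A separate NodePool for GPU-accelerated workloads could instead require `karpenter.k8s.aws/instance-gpu-count` with operator `Gt` and value `"0"`.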

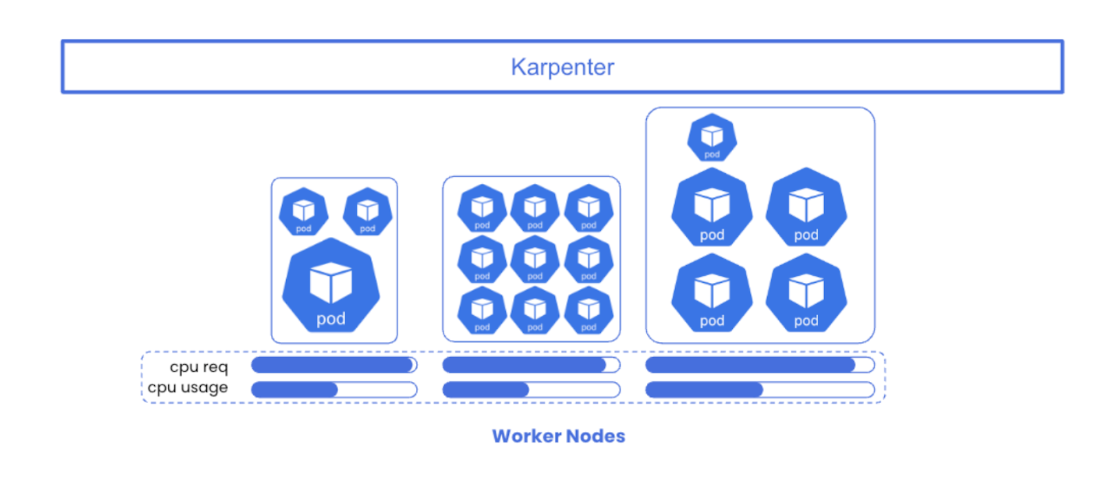

Node consolidation

Karpenter stands out for its consolidation capabilities, which improve infrastructure efficiency. Consolidation continuously looks for nodes that can be removed, or replaced with cheaper ones, because their pods fit elsewhere, reducing idle capacity and operational costs. These are the types of consolidation that Karpenter performs:

- Empty-node consolidation: Deletes empty nodes (nodes running only DaemonSet pods) in parallel

- Multi-node consolidation: Tries to delete two or more nodes at once, possibly launching a single replacement that is cheaper than all of the removed nodes combined

- Single-node consolidation: Tries to delete one node whose pods can be rescheduled, possibly launching a single cheaper replacement
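The single-node decision can be sketched as follows. This is a simplified model, not Karpenter’s implementation: CPU is the only resource, the instance catalogue and prices are invented, and a first-fit check stands in for Karpenter’s scheduling simulation. A node is deleted if it is empty or its pods fit in spare capacity on other nodes, replaced if a cheaper instance type can hold its pods, and otherwise kept. Multi-node consolidation applies the same idea to a set of nodes with one shared replacement.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical instance catalogue: (allocatable CPU in millicores, hourly price in USD).
CATALOGUE = [(2000, 0.08), (4000, 0.17), (8000, 0.34)]

@dataclass
class Node:
    name: str
    capacity_m: int
    price: float
    pods_m: List[int] = field(default_factory=list)  # CPU requests of pods on the node

    @property
    def free_m(self) -> int:
        return self.capacity_m - sum(self.pods_m)

def fits_elsewhere(pods: List[int], others: List[Node]) -> bool:
    """First-fit-decreasing check: can every pod land in the other nodes' spare CPU?"""
    spare = [o.free_m for o in others]
    for pod in sorted(pods, reverse=True):
        for i, room in enumerate(spare):
            if pod <= room:
                spare[i] -= pod
                break
        else:
            return False
    return True

def single_node_decision(node: Node, others: List[Node]) -> str:
    """Delete if empty or if its pods fit elsewhere; else replace if a cheaper type fits; else keep."""
    if not node.pods_m:
        return "delete: empty"
    if fits_elsewhere(node.pods_m, others):
        return "delete: pods fit on other nodes"
    needed = sum(node.pods_m)
    cheaper = [(price, cap) for cap, price in CATALOGUE if price < node.price and cap >= needed]
    if cheaper:
        price, cap = min(cheaper)
        return f"replace: {cap}m node at ${price:.2f}/h"
    return "keep"

busy = Node("busy", 4000, 0.17, [3000])  # 1000m spare
full = Node("full", 4000, 0.17, [4000])  # no spare
big = Node("big", 8000, 0.34, [500, 500])
print(single_node_decision(big, [busy]))  # delete: pods fit on other nodes
print(single_node_decision(big, [full]))  # replace: 2000m node at $0.08/h
```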

These strategies are part of a broader set of disruption controls in Karpenter, including disruption budgets that limit how many nodes can be disrupted at once. For a deeper dive into these mechanisms and their practical applications, see the separate article about consolidation.

Karpenter Support for Spot Instances

Support for Spot instances is one of the most prominent features of Karpenter. Let’s dive into that a little bit deeper:

Integration and Dynamic Provisioning of Spot Instances

Karpenter optimizes its use of EC2 Spot Instances through diversified instance selection. It evaluates and bin-packs pending pods across many instance types and sizes, then selects the most suitable and cost-effective Spot capacity pools. By using well-known labels such as karpenter.k8s.aws/instance-category and karpenter.k8s.aws/instance-size in NodePool requirements, you can keep the set of acceptable instance types broad, letting Karpenter adjust the cluster’s node composition to the aggregated resource demands of the applications and improving both cost-efficiency and scalability.
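To see why broad requirements matter, count the Spot capacity pools that survive such a filter (AWS defines a Spot pool as one instance type in one Availability Zone). The sketch uses a tiny, made-up slice of the EC2 catalogue and mimics a requirement of `instance-category In [c, m, r]` plus `instance-size NotIn [nano, micro, small]`; it does not call any AWS or Karpenter API.

```python
from itertools import product

# Hypothetical slice of the EC2 catalogue: (instance type, instance-category, instance-size).
CATALOGUE = [
    ("c5.large", "c", "large"), ("c5.xlarge", "c", "xlarge"),
    ("m5.large", "m", "large"), ("m6i.large", "m", "large"),
    ("m1.small", "m", "small"), ("r5.large", "r", "large"),
    ("t3.micro", "t", "micro"), ("p3.2xlarge", "p", "2xlarge"),
]

def matching(catalogue, categories, excluded_sizes):
    """Mimic `instance-category In categories` plus `instance-size NotIn excluded_sizes`."""
    return [name for name, category, size in catalogue
            if category in categories and size not in excluded_sizes]

types = matching(CATALOGUE, {"c", "m", "r"}, {"nano", "micro", "small"})
zones = ["us-east-1a", "us-east-1b", "us-east-1c"]
pools = list(product(types, zones))  # each (type, zone) pair is a distinct Spot capacity pool
print(types)       # ['c5.large', 'c5.xlarge', 'm5.large', 'm6i.large', 'r5.large']
print(len(pools))  # 15
```

Pinning a single instance type would leave three pools here; the wider filter offers fifteen, which gives a price-and-capacity-aware selection far more room to avoid pools that are scarce or likely to be interrupted.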

Fallback to On-Demand Instances

Karpenter automatically falls back to On-Demand Instances when no suitable Spot capacity is available. When a NodePool allows both capacity types, Karpenter prefers Spot and requests it with the price-capacity-optimized allocation strategy, which favors pools that are both inexpensive and less likely to be interrupted. If the Spot request cannot be fulfilled, Karpenter launches On-Demand capacity instead, so pods do not stay pending and services are not disrupted.
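The fallback order can be modelled in a few lines. This is a simplified sketch of the preference described above, not Karpenter’s code: the hypothetical `spot_available` set stands in for the insufficient-capacity errors Karpenter actually reacts to, and pricing is ignored.

```python
def pick_capacity(allowed, instance_types, spot_available):
    """Prefer Spot; fall back to On-Demand when no allowed Spot pool has capacity.

    allowed: capacity types permitted by the NodePool, e.g. {"spot", "on-demand"}
    spot_available: hypothetical set of instance types that currently have Spot capacity
    Returns (capacity_type, candidate_instance_types), or None if nothing can be launched."""
    if "spot" in allowed:
        spot = [t for t in instance_types if t in spot_available]
        if spot:
            return "spot", spot
    if "on-demand" in allowed:
        return "on-demand", list(instance_types)
    return None

types = ["c5.large", "m5.large"]
print(pick_capacity({"spot", "on-demand"}, types, {"m5.large"}))  # ('spot', ['m5.large'])
print(pick_capacity({"spot", "on-demand"}, types, set()))         # ('on-demand', ['c5.large', 'm5.large'])
print(pick_capacity({"spot"}, types, set()))                      # None: the pod stays pending
```

The last case shows why the NodePool must allow on-demand for the fallback to exist at all: a Spot-only NodePool leaves pods pending when Spot capacity runs out.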

Spot Interruption Handling

Karpenter can handle Spot interruptions natively. When it is configured with an SQS queue that receives EC2 events through Amazon EventBridge, it watches for Spot interruption warnings (sent two minutes before AWS reclaims the instance), scheduled maintenance events, and instance stopping or terminating events. It then taints, drains, and terminates the affected node ahead of the interruption so that its pods are rescheduled elsewhere, preserving the continuity and availability of services. Karpenter does not act on Spot rebalance recommendations; if you need those handled, the AWS Node Termination Handler can run alongside Karpenter, at the cost of more node churn.
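For illustration, this is roughly the first step an interruption handler performs on the EventBridge event EC2 emits. The event shape (a `detail-type` of `EC2 Spot Instance Interruption Warning`, with `detail.instance-id` and `detail.instance-action`) follows AWS’s documented format; the deadline uses the two-minute Spot notice, and everything after parsing (mapping the instance to a node, cordoning, draining) is left out. This is a sketch, not Karpenter’s controller code.

```python
import json
from datetime import datetime, timedelta

SPOT_NOTICE = timedelta(minutes=2)  # EC2 warns two minutes before reclaiming Spot capacity

def parse_interruption(raw: str):
    """Return (instance_id, reclaim_deadline) for a Spot interruption warning, else None."""
    event = json.loads(raw)
    if event.get("detail-type") != "EC2 Spot Instance Interruption Warning":
        return None
    # Normalize the trailing "Z" so fromisoformat works on older Python versions too.
    sent = datetime.fromisoformat(event["time"].replace("Z", "+00:00"))
    return event["detail"]["instance-id"], sent + SPOT_NOTICE

sample = json.dumps({
    "detail-type": "EC2 Spot Instance Interruption Warning",
    "source": "aws.ec2",
    "time": "2024-05-01T12:00:00Z",
    "detail": {"instance-id": "i-0123456789abcdef0", "instance-action": "terminate"},
})
instance_id, deadline = parse_interruption(sample)
print(instance_id, deadline.isoformat())  # i-0123456789abcdef0 2024-05-01T12:02:00+00:00
```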

Spot-to-Spot Consolidation

Spot-to-Spot consolidation extends Karpenter’s consolidation to Spot nodes: it can move workloads onto fewer Spot nodes or replace an underutilized Spot node with a cheaper Spot configuration. This is particularly useful when Spot Instances are underutilized. Karpenter assesses current Spot utilization and proactively replaces nodes when a more cost-effective Spot option exists, improving resource utilization and cost savings. At the time of writing, the feature must be enabled through the SpotToSpotConsolidation feature gate.
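Karpenter guards single-node Spot-to-Spot replacements with a flexibility rule: according to its documentation, it only performs them when at least 15 cheaper instance types are available for the replacement, so it does not keep chasing the single cheapest (and often most interrupted) pool. Multi-node Spot-to-Spot consolidation does not have this minimum. The check below is a simplified sketch of the rule; the instance types and prices are invented.

```python
MIN_FLEXIBILITY = 15  # Karpenter's documented minimum for single-node Spot-to-Spot replacement

def spot_to_spot_candidates(current_price, offerings):
    """Return cheaper Spot instance types, cheapest first, or [] if there are too few.

    offerings maps instance type -> Spot price (hypothetical units: thousandths of a USD per hour)."""
    cheaper = sorted((price, name) for name, price in offerings.items() if price < current_price)
    if len(cheaper) < MIN_FLEXIBILITY:
        return []  # too little choice: skip rather than chase the single cheapest pool
    return [name for _, name in cheaper]

# 20 invented instance types priced 50, 55, ..., 145.
offerings = {f"type-{i}": 50 + 5 * i for i in range(20)}
print(len(spot_to_spot_candidates(130, offerings)))  # 16: replacement allowed
print(spot_to_spot_candidates(90, offerings))        # []: only 8 cheaper types
```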

Karpenter challenges

While Karpenter addresses several of CAS’s limitations, it comes with a few considerations of its own.

Limited cloud support

At the time of writing, Karpenter’s officially supported providers are AWS and Azure (where it powers AKS Node Auto Provisioning), which limits its use on other cloud platforms. Here are the alternative options for a few other popular clouds:

- Google Cloud Platform (GCP): Google Kubernetes Engine (GKE) includes its own implementation of the Cluster Autoscaler. GKE’s node auto-provisioning creates node pools automatically based on the requirements of pending pods, an approach similar to Karpenter’s, and Autopilot mode goes further by managing nodes entirely.

- IBM Cloud: IBM offers autoscaling capabilities through its Kubernetes Service, which can be managed via the IBM Cloud console or through the IBM Cloud Kubernetes Service Autoscaler Helm chart.

- DigitalOcean: The platform provides a Cluster Autoscaler feature within its Managed Kubernetes service.

- Oracle Cloud: Oracle offers Kubernetes autoscaling in Oracle Container Engine for Kubernetes (OKE), which also uses the Cluster Autoscaler.

Alignment with pod resource requirements

To get the most out of Karpenter, pods need accurately defined resource requests for CPU and memory. Karpenter sizes nodes from these requests, so tuning them lets it match capacity closely to workload demand, minimizing waste and optimizing performance.

Here are a few scenarios showing what can happen when CPU and memory requests are not tuned:

- Resource overprovisioning: When requests are set well above actual usage, Karpenter provisions nodes that are larger than the workload really needs, leading to unnecessary costs.

- Resource underprovisioning and performance issues: When requests are missing or set too low, Karpenter treats the pods as needing little or nothing and may provision nodes that are too small, causing CPU throttling, memory pressure, or evictions.

- Frequent node replacements: Evicted pods are rescheduled, and consolidation acts on request-based utilization that does not reflect real usage, so nodes can churn, increasing operational overhead and causing disruptions during node replacement.

- Cost inefficiencies: Karpenter aims to optimize costs by provisioning exactly what pod requests call for. Missing or inaccurate requests lead to suboptimal node provisioning and unnecessary cloud spending.
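A toy model shows why requests drive node size. Karpenter sizes capacity from pod requests, not from observed usage or limits, so the sketch below picks the cheapest instance type from an invented catalogue whose allocatable CPU and memory cover the summed requests. It simplifies heavily: one node per batch, no system reservations or DaemonSet overhead. Pods that omit requests contribute nothing to the sum.

```python
# Hypothetical catalogue: name -> (allocatable CPU millicores, allocatable memory MiB, USD per hour).
CATALOGUE = {
    "small": (2000, 4096, 0.05),
    "medium": (4000, 8192, 0.10),
    "large": (8000, 16384, 0.20),
}

def cheapest_fit(pods):
    """Cheapest single instance type whose allocatable resources cover the summed requests.

    Each pod is a dict of requests; a missing key counts as zero, like an unset request."""
    cpu = sum(p.get("cpu_m", 0) for p in pods)
    mem = sum(p.get("mem_mi", 0) for p in pods)
    fitting = [(price, name) for name, (c, m, price) in CATALOGUE.items() if c >= cpu and m >= mem]
    return min(fitting)[1] if fitting else None

accurate = [{"cpu_m": 1000, "mem_mi": 2048}] * 3  # requests close to real usage
inflated = [{"cpu_m": 2000, "mem_mi": 4096}] * 3  # requests padded "just in case"
missing = [{}] * 3                                # no requests set at all
print(cheapest_fit(accurate))  # medium
print(cheapest_fit(inflated))  # large: overprovisioned
print(cheapest_fit(missing))   # small: underprovisioned if the pods really use ~3 CPUs
```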

Harmonizing Karpenter and StormForge

Managing CPU and memory requests and limits becomes a major burden for Kubernetes administrators once clusters reach a certain size. Both CAS and Karpenter rely on the requests set on pods, and this is the gap StormForge Optimize Live is designed to bridge.

Karpenter and Optimize Live work together to maximize Kubernetes cluster efficiency by addressing the challenge of finely tuned CPU and memory requests and limits, which effective Karpenter operation requires.

Integrating Karpenter with StormForge can significantly enhance cost optimization and performance efficiency in Kubernetes environments. By pairing Karpenter’s dynamic node provisioning capabilities with StormForge’s autonomous rightsizing for Kubernetes workloads, clusters can better manage resources and reduce costs. Read more about autonomous cost optimization with Karpenter and StormForge.

You can see how StormForge Optimize Live enhances Karpenter’s efficiency by starting a free trial of Optimize Live, or playing around in the sandbox environment.

Conclusion

Both the Cluster Autoscaler and Karpenter offer valuable solutions for managing Kubernetes node scaling, each taking a distinct approach. CAS, with its reliance on autoscaling groups, operates under the assumption that nodes within a group are uniform, necessitating multiple groups for different instance types. This method, while effective, can lead to complications and inefficiencies, particularly in larger clusters.

In contrast, Karpenter simplifies these complexities by avoiding a one-size-fits-all approach, allowing for more precise node provisioning, and reducing unnecessary resource allocation through advanced consolidation techniques. Its faster node startup and stronger support for Spot Instances also make it a compelling choice for modern Kubernetes environments seeking efficient and cost-effective scaling.